Curious about the ever-evolving world of A/B testing and conversion rate optimization? Lead Developer, Jeremy Sell, is here to answer some frequently asked questions on the subject. Have even more questions? Drop us a line!

What is A/B testing, and what can it help me achieve?

In development, people often use "A/B testing" as a synonym for the whole process of testing, but the definition is much narrower. A/B testing means comparing two versions of a web page or app against one another to determine which one moves the needle on a predetermined metric, usually a conversion rate. Whether you are a lead marketer or a CTO, you are always looking to increase your ROI. Testing helps you get there by improving your key metrics and creating a better user experience across your site.

How is multivariate testing different from A/B testing?

As discussed above, A/B testing compares two versions against one another. In multivariate testing, we compare multiple pieces of a web page at once. Making multiple changes requires isolating each change so that one winning change does not lift up another change that is neutral or negative on conversion. For example, a multivariate test might try two new headlines, add similar products to a page, and make reviews more visible. Running these as separate A/B tests could take months to collect enough data just to get through the first round, and then we would still have to test how the changes play together. Multivariate testing instead combines the changes in every possible combination and releases those simultaneously. The number of variations is [# of options for component 1] x [# of options for component 2] x [# of options for component 3], where each component's options include the original:

- Two new headlines + original headline = 3

- Adding similar products + original without = 2

- More visible reviews + original = 2

- 3 x 2 x 2 = 12 variations
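
To make that math concrete, here is a minimal Python sketch that enumerates every combination for the example above. The option labels are placeholders made up for illustration; a real testing platform would generate these combinations for you.

```python
from itertools import product

# Each component lists its options, including the original (labels are illustrative).
headlines = ["original headline", "new headline A", "new headline B"]
similar_products = ["without similar products", "with similar products"]
reviews = ["original reviews", "more visible reviews"]

# Every variation is one choice per component.
variations = list(product(headlines, similar_products, reviews))

print(len(variations))  # 12
for headline, products, review in variations:
    print(f"{headline} | {products} | {review}")
```

Adding one more option to any component multiplies the total, which is why traffic requirements grow so quickly.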

When is A/B testing a good idea? When is it a bad idea?

Good Idea: When you have a clear hypothesis with measurable metrics and traffic high enough to reach statistical significance. Testing can almost always make your user paths cleaner and clearer.

Bad Idea: Testing can be not just unhelpful but actually harmful to your site. This happens when winners are declared before reaching statistical significance. Another trap is driving extra traffic to a test to reach statistical significance more quickly; this brings in unusual traffic and can produce false positives. To me, the worst cases are the ones where wins cannot be implemented after tests produce positive results.

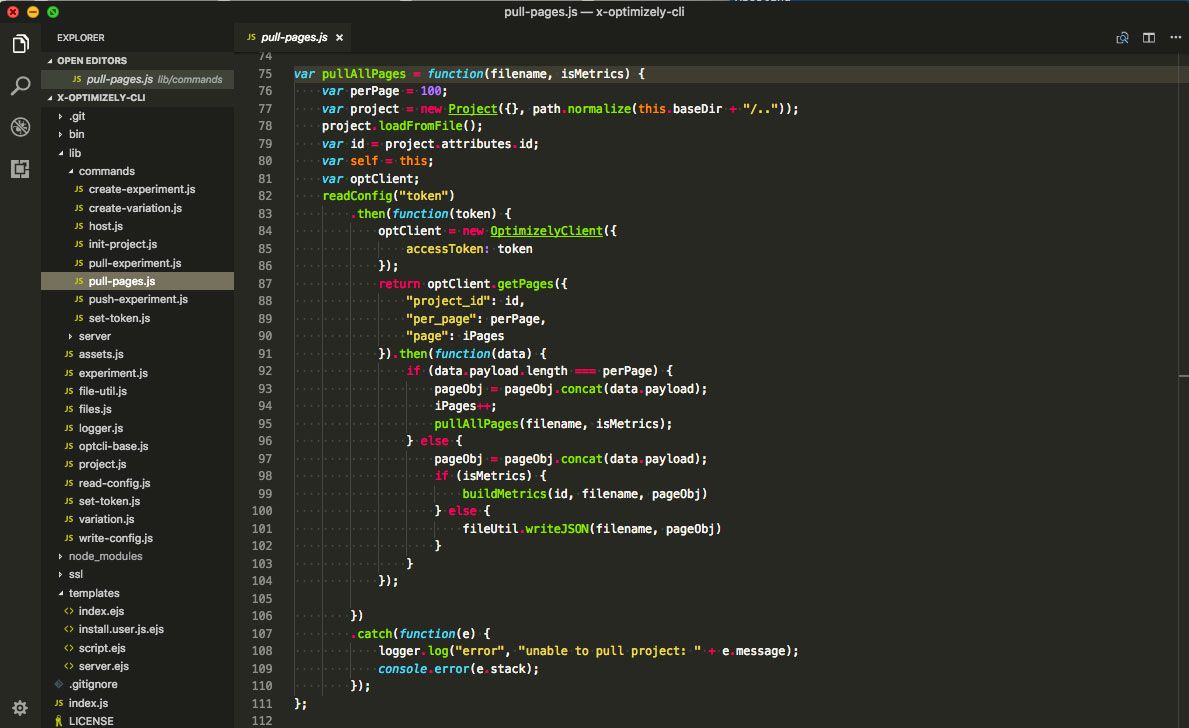

What testing platform should I use?

This depends heavily on your budget and the other technologies you rely on. I also wrote a whole blog post on this!

How many variables can there be in one test?

How much time do you have? If you have multiple variables, you will have to run multiple variations. That number increases quickly if each of those variables has multiple options. See the answer on multivariate testing for more on that math.

How many tests can I run at a time?

This is a tricky question. Say you are testing on an e-commerce site with 3 tests on different sections of the site, and the main metric for all tests is revenue increase. In this case each test affects the metric the other tests are measured by, which is revenue, so you may want to run mutually exclusive tests to avoid data pollution. Then again, running mutually exclusive tests splits your traffic, which means you will wait longer to declare a winner. Sometimes it is more important to prove out winners and move the needle on conversion rates than it is to be 100% accurate on every test individually. This varies widely by situation and by how quickly results are needed.
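
As a rough illustration of what "mutually exclusive" means in practice, here is a minimal Python sketch that places each user in exactly one test and then picks a variant within it. The test names, salt strings, and the hashing approach are all assumptions for the example; testing platforms handle this with their own mechanisms.

```python
import hashlib
from typing import Tuple

# Hypothetical test names, one per section of the site.
TESTS = ["homepage_hero", "product_page_reviews", "checkout_layout"]
VARIANTS = ["control", "variation"]


def bucket(user_id: str, salt: str, buckets: int) -> int:
    """Map a user to a stable bucket number in [0, buckets)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign(user_id: str) -> Tuple[str, str]:
    """Put the user in exactly one test, then choose a variant in that test."""
    test = TESTS[bucket(user_id, "which-test", len(TESTS))]
    variant = VARIANTS[bucket(user_id, test, len(VARIANTS))]
    return test, variant


print(assign("user-42"))
```

Because the assignment depends only on the user ID, a returning user always lands in the same test and variant. The trade-off is visible in the code: with three tests, each one sees only about a third of the traffic.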

How do I set up tracking to make sure I’m collecting valid and meaningful data?

For basic usage, the built-in tracking of your testing platform is fine, but it should be tested to make sure the analytics are flowing in correctly. For more robust analytics I would recommend using Google or Adobe. Testing platforms choose winners using a statistical model (Bayesian in many cases, frequentist in others), and having an outside source where you can track user behavior can be invaluable. What happens when your test fails? Can you dig in and look at the users in variation 3 to see what other paths they might be taking in your experience? Google and Adobe offer this, along with the ability to break down your data to really understand your experiment, whether winning or losing.

How long should I run tests, and when can a winner be declared?

You need to reach statistical significance. There are calculators for this here, here, and here!
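
For a sense of what those calculators do, here is a minimal Python sketch using a common normal-approximation formula for comparing two conversion rates. The function name, the 5% significance level, and the 80% power default are assumptions for the example; individual calculators may use different formulas and defaults.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, minimum_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH variation to detect a relative lift.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    minimum_lift:  smallest relative improvement worth detecting, e.g. 0.20 for +20%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + minimum_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


users_needed = sample_size_per_variation(0.05, 0.20)
print(users_needed)
```

Dividing the result by your daily traffic per variation gives a rough estimate of how many days the test needs to run. Smaller lifts need far more users, so it pays to decide up front what size of improvement is worth detecting.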

What is ‘statistical significance’?

Statistical significance is a measure of how unlikely it is that the difference between the baseline and a variation is due to random chance alone. For a variation to be declared a winner, its difference from the baseline has to exceed the margin that could be attributed to random variation. That margin shrinks as the number of users in the experiment grows.
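
One way to see this in numbers is a two-proportion z-test, sketched below in Python. This is a frequentist check chosen for illustration, not necessarily the model your testing platform uses, and the conversion counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, users_a, conv_b, users_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    # Pooled rate assumes both groups share one true conversion rate (the null hypothesis).
    pooled = (conv_a + conv_b) / (users_a + users_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same 5% vs 6% conversion rates, at two different sample sizes (illustrative numbers).
p_small = two_proportion_p_value(50, 1000, 60, 1000)
p_large = two_proportion_p_value(500, 10000, 600, 10000)
print(p_small, p_large)
```

With 1,000 users per group the difference does not clear the usual 0.05 threshold, while the same rates with 10,000 users per group do. That is the margin for randomness shrinking as the number of users grows.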

What if a test performs poorly?

Dig into the analytics and learn! Losing tests can teach you what users are looking for. We had a test where we built a signup form for a college into a larger page that had links to informational pages. The form lost in conversion to the original, which was on a standalone page. What we found was that users who completed the new form were more likely to have looked through the informational pages, and they became more qualified leads for the sales team.

What should I do with a winning test?

Implement it! I wrote a whole blog post on this one, as well.

How do I encourage my team to get onboard with A/B testing?

Each department can be encouraged in different ways. Mainly, testing gives you an opportunity to better understand your users' needs on a regular basis and to offer a product that aligns more closely with what they are looking for.

Written by Jeremy Sell, Senior Experience Engineer