
We here at roboboogie love to test. It’s exciting to blend the scientific with the creative to discover a better path toward conversions. Sometimes the most difficult part of optimization is not finding ways to improve ROIs or KPIs, but implementing the winning tests.

Why implementing your wins is so important

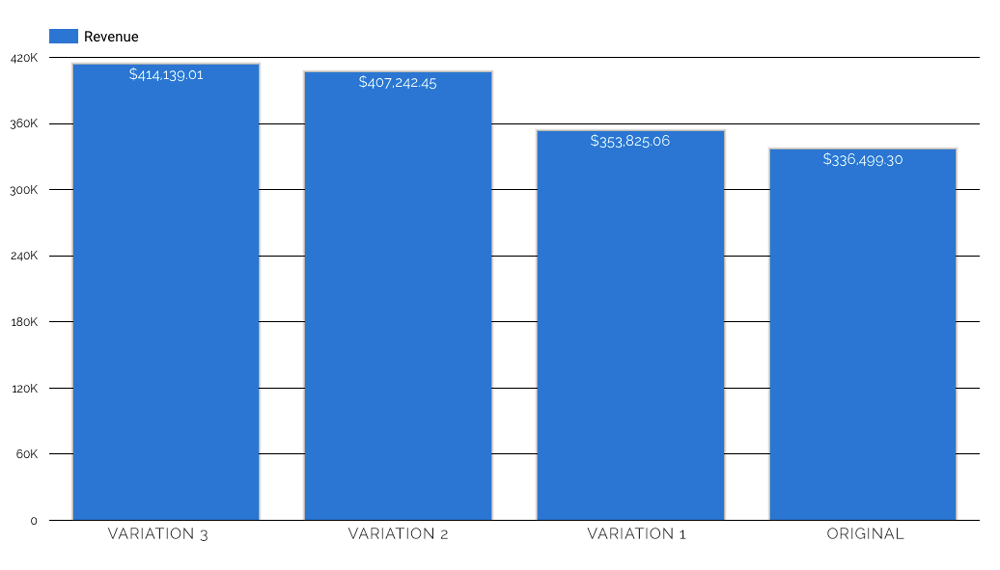

We recently wrapped a test with three variations that made related products available directly from a user’s cart. We learned a ton about user behavior from this test, and in about five weeks the three variations grew revenue by $17,326, $70,743 and $77,640 respectively. The baseline and each variation were allocated 25% of the total traffic.

So you’re probably thinking that these types of tests are generally shipped off, developed and launched within the hour, right? I mean, every week a customer could be losing up to $60,000! Sadly, too often winning variations never get integrated into a site. Why? Almost always it’s because there was no clear path toward implementation before testing began. Testing is the goal, and making those tests into a feature of the site is an afterthought.
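For anyone curious where a figure like $60,000 a week comes from, here is a rough back-of-the-envelope sketch. It assumes the revenue numbers above are lifts measured over the full five weeks, and that the lift would scale linearly if a variation received 100% of traffic instead of 25%. Both are simplifying assumptions on our part, and the variable names are ours.

```python
# Rough projection: what each variation's lift might be worth per week
# if it were shipped to all traffic. Assumes linear scaling with traffic
# share, which real-world results may not follow exactly.

TEST_WEEKS = 5          # approximate test duration from the article
TRAFFIC_SHARE = 0.25    # each variation saw 25% of total traffic

# Revenue lift per variation over the whole test (figures from the article)
revenue_lift = {
    "variation_1": 17_326,
    "variation_2": 70_743,
    "variation_3": 77_640,
}


def weekly_gain_at_full_traffic(total_lift, weeks=TEST_WEEKS, share=TRAFFIC_SHARE):
    """Scale a test-period lift to a per-week figure at 100% traffic."""
    weekly_lift_during_test = total_lift / weeks
    return weekly_lift_during_test / share


projected = {
    name: weekly_gain_at_full_traffic(lift)
    for name, lift in revenue_lift.items()
}

for name, value in projected.items():
    print(f"{name}: about ${value:,.0f} per week at full traffic")
```

Under those assumptions, the strongest variation works out to a little over $62,000 per week, which is in line with the rough figure quoted above.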

Create a clear path to implementation

By failing to implement our wins, we make iterative testing more difficult and more costly to site performance. Once we determine a winner, we look to maximize our effect on users by running smaller tests on the winning variation to home in on the most impactful changes. Those follow-up variations should only need to alter a small part of the previous winner, but if that winner was never implemented, each small change has to carry the previous test’s code along with it. This shared baseline of code, repeated across multiple variations, creates a larger testing snippet as well as more places where errors could crop up and skew a test’s results.

The most common roadblock we see is a separation between departments. The testing department is usually spearheaded, surprisingly, not by the development team, but by some combination of marketing and sales looking to find conversions and engagement. The dev team often has a backlog of features to build or bugs to fix, so finding time to develop a winning test that isn’t on the roadmap can be tricky. That’s why it’s imperative that, prior to testing, all departments needed to develop and deploy a winning variation understand their responsibilities and have resources available for development, QA and launch. Optimization and testing need company-wide buy-in.

Steps for success

Strangely, securing budget can stand in the way of implementation even with a clear ROI win. The best processes we’ve seen from our customers usually include budget for implementing winning variations before testing starts. Sometimes this can be tricky, as there is no data yet to back up the proposal, and testing teams end up trying to secure budget for new tests and for implementing old tests at the same time. Testing is more exciting, justifies future budget in a simple way, and usually takes precedence if only one budget can be secured. To safeguard the implementation of successful test results, keep the following in mind:

- Secure budget for implementation immediately

- Get all departments needed on board before you start

- Implement early and implement often

If you are working with an optimization partner, like roboboogie, there are a couple of ways to smooth this transition to site integration. Both involve offering access to a larger portion of your codebase. If your partner has the ability to build tests into your actual site and trigger those tests via Optimizely’s REST or JavaScript API, then the losing variations can simply be removed from the page and all you’re left to do is QA and launch. This approach can be difficult while your in-house development team is potentially changing those same pages, but it will save the most time. The second approach is to offer enough of your site that your optimization partner can rebuild the winning variation to be as close to compatible as possible, leaving your in-house development team to make small edits before QA and launch. Both approaches will significantly lighten the load for your internal team.
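To make the first approach a little more concrete, here is a minimal sketch of what "building the test into the site" can look like. It is purely illustrative: the function names, variation keys and section names are hypothetical, and it does not use Optimizely’s actual API. In a real setup the variation key would come from your testing tool.

```python
from typing import Callable, Dict, List

# Hypothetical cart renderers living in the site's own codebase.
# Each returns the list of sections shown on the cart page.
def render_baseline(cart: List[str]) -> List[str]:
    return ["cart_items"]


def render_related_inline(cart: List[str]) -> List[str]:
    return ["cart_items", "related_products_inline"]


def render_related_sidebar(cart: List[str]) -> List[str]:
    return ["cart_items", "related_products_sidebar"]


# While the test runs, every variation is registered here.
# Once a winner is picked, the losing entries (and their functions)
# can be deleted, leaving only QA and launch.
VARIATIONS: Dict[str, Callable[[List[str]], List[str]]] = {
    "baseline": render_baseline,
    "related_inline": render_related_inline,
    "related_sidebar": render_related_sidebar,
}


def render_cart(cart: List[str], variation_key: str) -> List[str]:
    """Render the cart for the variation chosen by the testing tool."""
    # Unknown or missing keys fall back to the baseline experience.
    renderer = VARIATIONS.get(variation_key, render_baseline)
    return renderer(cart)
```

Because the variations already live alongside production code, shipping the winner is mostly a matter of removing the others rather than rebuilding anything from a testing snippet.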

Not integrating your wins is like buying new cabinets to remodel your kitchen and then leaving them in your basement. You’ve spent real money, and you would have a better product if you finished the job. Creating a clear path toward implementation will help you and your team increase your metrics, which in turn makes a clear case for continued testing. So secure budget for implementations, know your development team’s availability or have your optimization partner on board to build winning variations, and get all groups involved to buy into the science of testing. Good luck!

Written by Jeremy Sell