
Who doesn’t love the idea of adding a new feature to their website to enhance customers’ experiences and make more money?

It could be a new survey that helps users find the right product among those you offer, or a pop-up modal or banner that connects users with promotions or offers. It could also be a new payment option like Apple Pay, a payment plan, or an extended warranty or coverage plan like those offered by Clyde.

All of those sound like features designed to make your customers happy and to make you more money. Perfect!

Introducing new elements or features to your digital experience can be a fantastic way to address customers’ wants and needs. New features can lead to improved customer satisfaction, and can also be a means of driving your business’s bottom-line revenue and conversion goals.

Sounds great, right? Create the feature, roll it out, and count the money and high fives from happy customers.

But before you flip the switch on the new feature, let’s stop and consider a strategic approach – testing into new feature rollouts.

While new features start with the best intentions of providing an improved customer experience and helping businesses achieve their goals, the way the changes fit into the existing user experience can be more complicated than expected, and in some cases can have drastically negative consequences.

1. Why Should I Test Into a Feature Rollout?

Let’s start with the why. Changes to your website or digital experience start with an assumption or, in optimization parlance, a hypothesis. The hypothesis for a new feature can often be as simple as, “By rolling out feature Y (a pop-up modal with recommended products), we will see an increase in add-to-cart clicks, which will lead to an increase in average order value.”

It sounds great, but how do we validate that our assumption or hypothesis is correct? And how do we ensure that the new feature doesn’t create a negative experience for users or negatively impact the metric we are trying to improve?

For example, suppose users can’t figure out how to close the pop-up, and a large percentage of them abandon the shopping experience without purchasing. Yikes!

While this would be a very undesirable outcome of your initial test, this data is invaluable in improving the user experience. And because the experience is only presented to a small number of visitors, you haven’t negatively impacted ALL of your potential buyers.

Testing into a feature rollout allows you to strategically introduce your new feature to a small number of users and compare the impact of the new experience to your existing experience. This approach allows you to launch your feature with confidence, and with the data to validate your decision to roll out.
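To make “a small number of users” concrete, here is a minimal sketch of how a testing platform might decide who sees the new feature. It is an illustration only, not how any particular tool works: the function name `assign_variant`, the experiment name `promo_popup`, and the 10% exposure are all assumptions we made up for the example.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, exposure: float = 0.10) -> str:
    """Return 'new_feature' for roughly `exposure` of users, otherwise 'control'.

    Hypothetical helper for illustration; real testing platforms handle this for you.
    """
    if not 0.0 <= exposure <= 1.0:
        raise ValueError("exposure must be between 0 and 1")
    # Hash the experiment name together with the user ID so that the same
    # user always gets the same experience for a given experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex characters into a number in the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "new_feature" if bucket < exposure else "control"


# Usage: check what share of 10,000 made-up users would see the new feature.
users = [f"user-{i}" for i in range(10_000)]
exposed = sum(assign_variant(u, "promo_popup") == "new_feature" for u in users)
print(f"{exposed / len(users):.1%} of users see the new feature")
```

Because the assignment is based on a hash rather than a coin flip on every page view, a returning visitor keeps seeing the same experience, which keeps the comparison between the two groups clean.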

Let’s take a look at what this approach looks like in practice.

2. Identify the Feature and Define Your Measurement of Success

Along with establishing your hypothesis, you should also establish your measures of success.

Defining success removes ambiguity in the feature’s impact and allows you to objectively evaluate the performance of your new feature. These metrics for success can be anything that you can use to measure the effectiveness of your changes. In eCommerce, we often look at increases in add-to-cart rate, average order value, or alternatively, a decrease in cart abandonment or a decrease in exit rate in the shopping cart.

We recommend taking note of all of the metrics you consider and sharing them with your analyst as they are all potential KPIs for your testing plan.

Let’s look at an example where we are trying to address low conversion rates on our eCommerce website. We design and develop a new way to serve promotions to users on our site using a pop-up with an enticing, personalized offer. In this case, our main measure of success is the conversion rate for users who saw the pop-up compared to users who did not.
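As a rough sketch of that comparison, the snippet below calculates the conversion rate for each group and the relative lift between them. The visitor and order counts are invented for illustration, and the helper names are our own rather than part of any analytics tool.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted (for example, completed a purchase)."""
    if visitors <= 0:
        raise ValueError("visitors must be greater than zero")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant's rate compared to the control's rate."""
    if control_rate == 0:
        raise ValueError("control_rate must be non-zero to compute a relative lift")
    return (variant_rate - control_rate) / control_rate


# Illustrative numbers only: 10,000 visitors in each group.
no_popup = conversion_rate(conversions=200, visitors=10_000)   # did not see the pop-up
saw_popup = conversion_rate(conversions=230, visitors=10_000)  # saw the pop-up

print(f"Control conversion rate: {no_popup:.2%}")
print(f"Pop-up conversion rate:  {saw_popup:.2%}")
print(f"Relative lift:           {relative_lift(no_popup, saw_popup):.1%}")
```

A lift figure like this is only a starting point. Whether the difference is large enough to trust depends on how many visitors were in each group.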

Features are not always focused on conversion rate. We can also look at improvement in clickthrough rate, increased promotional redemptions, increased revenue attributed to marketing, increased add-to-carts for promoted products, decreased cart abandonment, total revenue lift, or something else entirely depending on your unique factors.

The key here is that knowing your measurement of success before you start will remove ambiguity in evaluating a test, regardless of how successful it is.

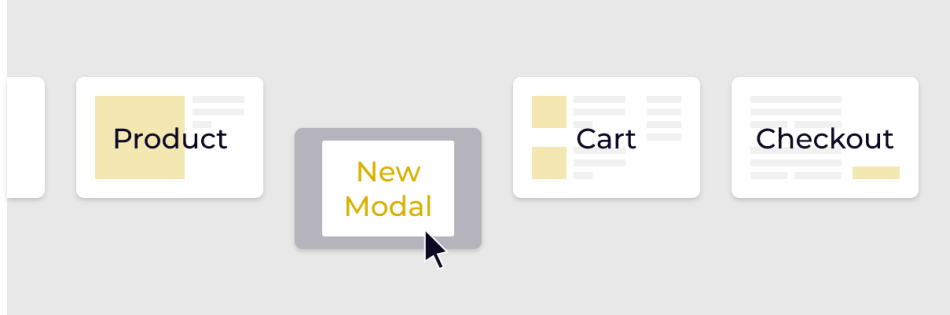

3. Identify Your Testing Variables

Once you have success defined, it’s time to evaluate the variables in your current experience or in your new feature that will likely contribute to a successful rollout.

Most often, these variables are going to fall into the categories of design and development. This is where having a team of experts who understand testing and testing strategy is vital. We recommend including an optimization strategist, UX/UI designer, and experience engineer or developer in the conversation as you identify your testing variables and evaluate the potential impact on the user experience, as well as the impact on scope and timeline.

When evaluating the variables with your experts, you can think about the visual UI design of the feature, when and where in the user experience the feature is presented to users, and how the feature impacts a user’s journey through your site with their non-linear, real-world behavior.

From a visual perspective, we also recommend evaluating how the presentation of a new feature impacts and reflects your brand. Does the new feature look on-brand? Is the messaging consistent with what your customers expect? Does the type of feature resonate with your customer base?

As you evaluate the UX and UI options and start crafting visual solutions, we recommend communicating with the engineer who will be building and launching your test, to ensure the experience can be built the way you envision it without causing technical issues. Experienced engineers can also provide strategic input from a technical perspective and identify potential technical hurdles that could hurt the user experience or make for an ineffective test.

4. Design, Build, and Run Your Test

With your hypothesis in place, your success metrics defined, and your variables isolated, it’s time to design, build, and run your test.

If A/B testing and conversion rate optimization are everyday practices at your organization, this process is likely already in place. If you’re newer to testing and optimizing and diving in with a feature rollout – congratulations! There are a few common pieces of technology that you’ll need in place to easily run and monitor your tests.

You need a testing platform – this is the tool that will allow you to display your new feature. Tools like AB Tasty, Convert, Kibo, and many others allow you to run your tests and determine the number of users who will see your new feature and the number of users who will see the previous experience. (Google Optimize was long a popular choice as well, but Google retired it in September 2023.)

These tools integrate with almost every website. If you aren’t sure where to start, we can help!

You need an analytics tool or tools. Accurate measurement of your tests is critical. The good news is that there are many great options for reliable analytics, including Google Analytics, as well as the reporting tools included with some of the testing platforms we’ve mentioned above. We also recommend a digital experience analytics tool with session replay like FullStory, which allows you to quickly identify user pain points and frustrations.

With your design, build, and testing platform in place, it’s time to run and monitor the test. Your optimization strategist should be the one to determine how long a test should run to achieve statistical significance and the percentage of users who should see the test variation.
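To give a feel for how test duration gets estimated, here is a simplified sketch using a common textbook approximation for comparing two conversion rates. It is not a substitute for your strategist’s judgment or your testing platform’s calculator. The 5% significance level, 80% power, even 50/50 traffic split, and all of the traffic numbers are assumptions chosen for the example.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH group to detect the given lift.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    if not 0 < baseline_rate < 1 or min_relative_lift <= 0:
        raise ValueError("baseline_rate must be in (0, 1) and min_relative_lift > 0")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_days(n_per_variant: int, daily_visitors_in_test: int) -> int:
    """Days needed if test traffic is split evenly between two groups."""
    if daily_visitors_in_test <= 0:
        raise ValueError("daily_visitors_in_test must be greater than zero")
    return math.ceil(2 * n_per_variant / daily_visitors_in_test)


# Illustrative inputs: 2% baseline conversion rate, hoping to detect a 15% relative lift,
# with 5,000 visitors per day entering the test.
needed = sample_size_per_variant(baseline_rate=0.02, min_relative_lift=0.15)
print(f"Visitors needed per group: {needed:,}")
print(f"Estimated duration: {estimated_days(needed, daily_visitors_in_test=5_000)} days")
```

The takeaway is the trade-off: the smaller the improvement you want to detect, or the less traffic you send into the test, the longer the test needs to run.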

5. Analyze and Iterate

As tests conclude, it may be tempting to simply look at your success metric and move on. However, it’s important to ingest and analyze as much of the data as possible to uncover insights that may lead to valuable iterations.

We know we said “test” (singular), but there is a lot of opportunity to iterate and run multiple tests as you refine the experience surrounding your new feature and maximize its impact on your goals and your customer experience.

Alternatively, you may find only a small impact, a statistically insignificant impact, or a negative impact when you run a test. That doesn’t mean it’s time to throw the feature out with the bathwater. That’s when it’s time to dive into deeper analysis to understand what did and didn’t positively connect with your visitors.
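One common way to judge whether a difference in conversion rate is statistically significant is a two-proportion z-test. The sketch below is a minimal version of that check, with invented numbers. Your testing platform may use a different statistical method, so treat this as an illustration of the idea rather than a description of any specific tool.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing group B's conversion rate to group A's."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate we would expect if the feature had no effect.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when no one (or everyone) converted")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Illustrative numbers only: 200 of 10,000 control visitors converted,
# versus 230 of 10,000 visitors who saw the new feature.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=230, n_b=10_000)
verdict = "significant" if p < 0.05 else "not significant"
print(f"z = {z:.2f}, p-value = {p:.3f} ({verdict} at the 5% level)")
```

With these made-up numbers, what looks like a healthy lift does not clear the conventional 5% threshold, which is exactly the kind of result that calls for a longer test or deeper analysis rather than a snap decision.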

While this process can take time and it’s certainly not a magic wand, we’ve found incredible success in sticking to the test-and-optimize methodology with our clients. The fact is, by testing into a new feature you mitigate the risks of rolling out a new feature to your entire audience and give yourself the opportunity to roll out an optimized version, backed by data!

6. Implement with Confidence

Congratulations, you have developed a new feature that you know meets both your expectations and your users’! You have the data to back it up, and a baseline for further optimization if you want to ideate and improve the feature further.

Additionally, because you have been testing with your real users, some of them have already been exposed to the new feature, which can make for easier adoption and stronger, more consistent performance.

While this testing methodology can take time and planning, it is a way to confidently roll out new features, functionality changes, and user experience changes rooted in data. Taking a test-and-optimize approach to your digital experience as a whole creates near-limitless opportunities for digital transformation and optimization.

If you aren’t sure how to get started with testing or want to consult with the best in the business – send us an email and we’ll set up a meeting with you!